Google’s annual I/O developer conference is back! On May 20 and May 21, the tech giant is expected to drop a boatload of news and updates on everything from Gemini AI to Android 16 to Android XR, its newest platform for augmented and mixed reality headsets and smart glasses. There will be so much AI shoved into every service and device, you might get a headache trying to keep up with the blitz of news.

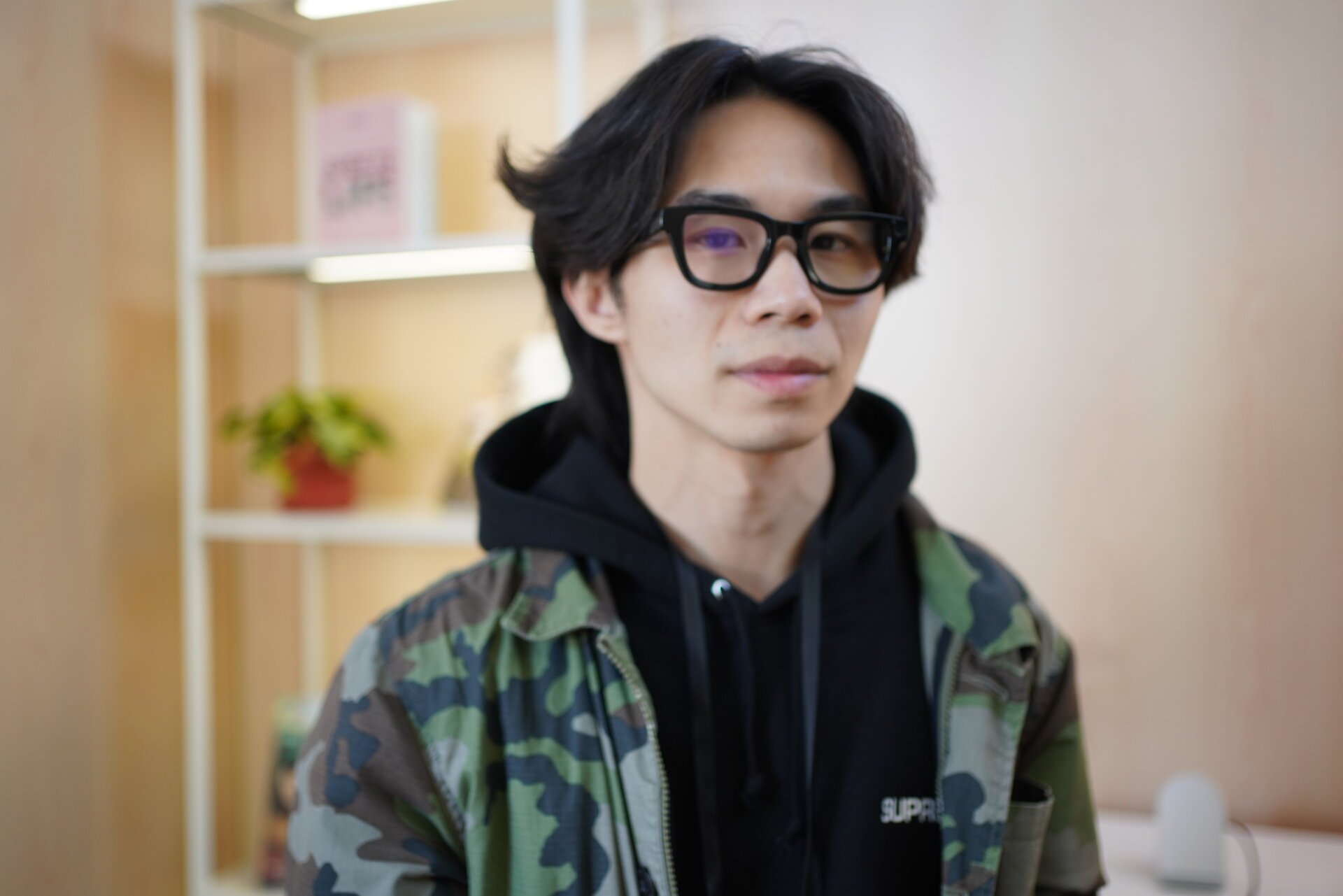

Lucky for you, Gizmodo is here to help you make sense of it all by sifting through the hours of keynotes and developer sessions to bring you what really matters. As we’ve been doing since Gizmodo was founded over 20 years ago, we won’t be holding back on calling a spade a spade. Senior Consumer Tech Editor Raymond Wong will be on the ground at Google’s Mountain View, Calif.-based HQ reporting on everything he can get his hands on.

Keep this page bookmarked Tuesday and Wednesday as Raymond, Senior Writer James Pero, and Consumer Tech Reporter Kyle Barr cut through all the AI noise. We promise we won’t use Gemini to live blog for us.

Good Game, Everyone! Now Hit the Showers

Well, folks, another Google I/O is in the books, and I have to say… I’m sleepy. That was a lot, but the good news is we got a glimpse (emphasis on the glimpse part) at a lot of interesting previews of what’s to come in XR glasses, shoppable AI, web search, video generation, and the surprisingly jittery future of 3D video conferencing.

How all of that will end up is anyone’s guess, but Google has laid out a fairly concrete vision of its future, and to no one’s surprise, that centers almost entirely around AI. Our hands-on with Google’s gadgets by way of Gizmodo’s Ray Wong, Senior Editor, Consumer Tech, may have been brief, but who knows what the future has in store—this likely isn’t the last we’ve seen of Project Aura, Google and Xreal’s smart glasses. For now, there’s a lot to marinate on, and naturally, the minute we have more to test, share, or complain about when it comes to the ever-expanding Google universe, you’ll be the first to know.

Good Googling, y’all. Signing off —James Pero

Goodbye San Fran/Mountain View. See you again. Very soon 😉! pic.twitter.com/Kqu72m1Emo

— Ray Wong (@raywongy) May 22, 2025

(Google) Beam Me Up… and Into Your TV

There’s something about how… normal Google Beam is that makes it not as mind-blowing as I thought it would be. Don’t get me wrong, it’s very high-tech wizardry that captures multiple streams of 2D video and then stitches them into a glasses-free 3D version of a person. As I wrote in my hands-on below:

“Maybe that’s a blessing in disguise—there’s no shock factor (not for me, at least), which means the Beam/Starline technology has done its job (mostly) getting out of the way to allow for genuine communication.”

—Raymond Wong

All Alone in the Press Lounge

Google I/O Day 2 is… kind of a ghost town this year. Either everyone is really fatigued from all the AI announcements Google made during its keynote yesterday or attendee numbers are much lower this year? This press room was packed yesterday—today, like four people showed up. I’m the only one inside right now. I can’t tell if that’s sad or whether I should be sad that I bothered coming in. To be fair, I had to charge up my MacBook Air… —Raymond Wong

Veo 3 Gone Wild

Google’s new Veo 3 video generator is now available via preview, and people are naturally putting the model to the test. The results are pretty wild, about as wild as OpenAI’s Sora at first glance. Now, what exactly people are going to use Veo 3 for is a different question entirely, but I don’t really love this example of someone faking a Twitch stream of Fortnite. —James Pero

Uhhh… I don't think Veo 3 is supposed to be generating Fortnite gameplay pic.twitter.com/bWKruQ5Nox

— Matt Shumer (@mattshumer_) May 21, 2025

I’ll Be Going Without the Flow, Thanks

Listen, I don’t dislike AI on principle. There are tons of things that I think it can, and will, do well. I want agentic AI to order my Uber, or do my taxes, or I don’t know, streamline the labyrinthine advertisement that is now web search. One thing I do not want AI to do, however, is make movies.

As much as I think tools like Google Flow, which it describes as an AI filmmaker, are a technical feat, I’m not ready to see human creativity take a backseat. My advice? Don’t put down the camera quite yet. I think filmmaking the old-school way is here to stay. —James Pero

Hey Google, What’s With These Short-Ass Demos?

I swear I’m not trying to be annoying. But I am very frustrated that Google keeps giving press—or is it just me?!—like less than two minutes to demo its new AI products. Yesterday, I had 90 seconds to try out Gemini on prototype Android XR smart glasses. Minutes ago, I went to try Google Beam, the company’s video calling TV hardware that replicates caller and receiver in glasses-free 3D as if they’re sitting right in front of you. I sat down, a nice guy named Jerome reached out with his hands and spoke for a few seconds, and then quickly told me that was it. I barely got in a word. I’m writing up my thoughts based on my ridiculously short demo. Check back in a few. —Raymond Wong

I just tried Google Beam, which was actually the older Project Starline setup. It was my first time checking it out. I had like a minute to chat before the guy on the other end was like "unfortunately we have a set time and this has been great."

What is with these super short… pic.twitter.com/mighUOlEVS

— Ray Wong (@raywongy) May 21, 2025

For Google Glass, Hindsight is 20/20

I’m going to say something controversial: Google Glass was not a hit. For anyone who might be unaware of the Google Glass legacy, that’s obviously a joke, seeing as how the gadget spawned one of the most memorable pejoratives in consumer tech history: Glasshole.

When the pair of smart glasses launched back in 2013, it was a different world, for one, but according to the glasses’ creator, Sergey Brin, some, um, missteps were also made.

“I definitely feel like I made a lot of mistakes with Google Glass, I’ll be honest,” Brin said in a fireside chat during Google’s I/O conference yesterday.

“I just didn’t know anything about consumer electronic supply chains, really, and how hard it would be to build that and have it at a reasonable price point and managing all the manufacturing and so forth,” he continued.

That admission, however honest, probably doesn’t do much to quell the sting Brin must feel seeing the category ascend via Google, Meta, and, sometime soon, maybe Apple and Samsung. Sorry, Sergey, sometimes things just happen too fast, too soon. —James Pero

Just a Glimmer of Google’s XR Glasses

As Gizmodo’s Senior Editor, Consumer Tech, Ray Wong, explained, we did not get a lot of time with Google’s XR glasses. We did, however, have enough time to take a little video of what they look like, what we actually got to see, and what the experience of getting to see them was like. Spoiler alert: This video is about as long as the demo Ray got. —James Pero

My 90 Seconds With the Android XR Smart Glasses

I wish I was making this stuff up, but chaos seems to follow me at all tech events. After waiting an hour to try out Google’s hyped-up Android XR smart glasses for five minutes, I was actually given a three-minute demo, where I actually had 90 seconds to use Gemini in an extremely controlled environment. And actually, if you watch the video in my hands-on write-up below, you’ll see that I spent even less time with it because Gemini fumbled a few times in the beginning. Oof. I really hope there’s another chance to try them again because it was just too rushed. I think it might be the most rushed product demo I’ve ever had in my life, and I’ve been covering new gadgets for the past 15 years. —Raymond Wong

Waiting Game… to Try Android XR Smart Glasses or Samsung Project Moohan

Google, a company valued at $2 trillion, seemingly brought one pair of Android XR smart glasses for press to demo… and one Samsung Project Moohan mixed reality headset running the same augmented reality platform. I’m told the wait is 1 hour to try either device for 5 minutes. Of course, I’m going to try out the smart glasses. But if I want to demo Moohan, I need to get back in line and wait all over again. This is madness! —Raymond Wong

Samsung Project Moohan is out here. Only 1 hr to wait for a press demo… pic.twitter.com/TROjJcYDjQ

— Ray Wong (@raywongy) May 20, 2025

Keynote Fin

Talk about a loooooong keynote. Total duration: 1 hour and 55 minutes, and then Sundar Pichai walked off stage. What do you make of all the AI announcements? Let’s hang in the comments! I’m headed over to a demo area to try out a pair of Android XR smart glasses. I can’t lie, even though the video stream from the live demo lagged for a good portion, I’m hyped! It really feels like Google is finally delivering on Google Glass over a decade later. Shoulda had Google co-founder Sergey Brin jump out of a helicopter and land on stage again, though. —Raymond Wong

Project Astra Is In Everything

Pieces of Project Astra, Google’s computer vision-based UI, are winding up in various products, it seems, and not all of them are geared toward smart glasses specifically.

One of the most exciting updates to Astra is “computer control,” which allows one to do a lot more on their devices with computer vision alone. For instance, you could just point your phone at an object (say, a bike) and then ask Astra to search for the bike, find some brakes for it, and then even pull up a YouTube tutorial on how to fix it—all without typing anything into your phone. —James Pero

A Shopping Bot for Everyone

Shopping bots aren’t just for scalpers anymore. Google is putting the power of automated consumerism in your hands with its new AI shopping tool. There are some pretty wild ideas here, too, including a virtual shopping avatar that’s supposed to represent your own body—the idea is you can make it try on clothes to see how they fit. How all that works in practice is TBD, but if you’re ready for a full AI shopping experience, you’ve finally got it.

For the whole story, check out our story from Gizmodo’s Senior Editor, Consumer Tech, Raymond Wong. —James Pero

This Android XR Demo is What I’ve Been Waiting For

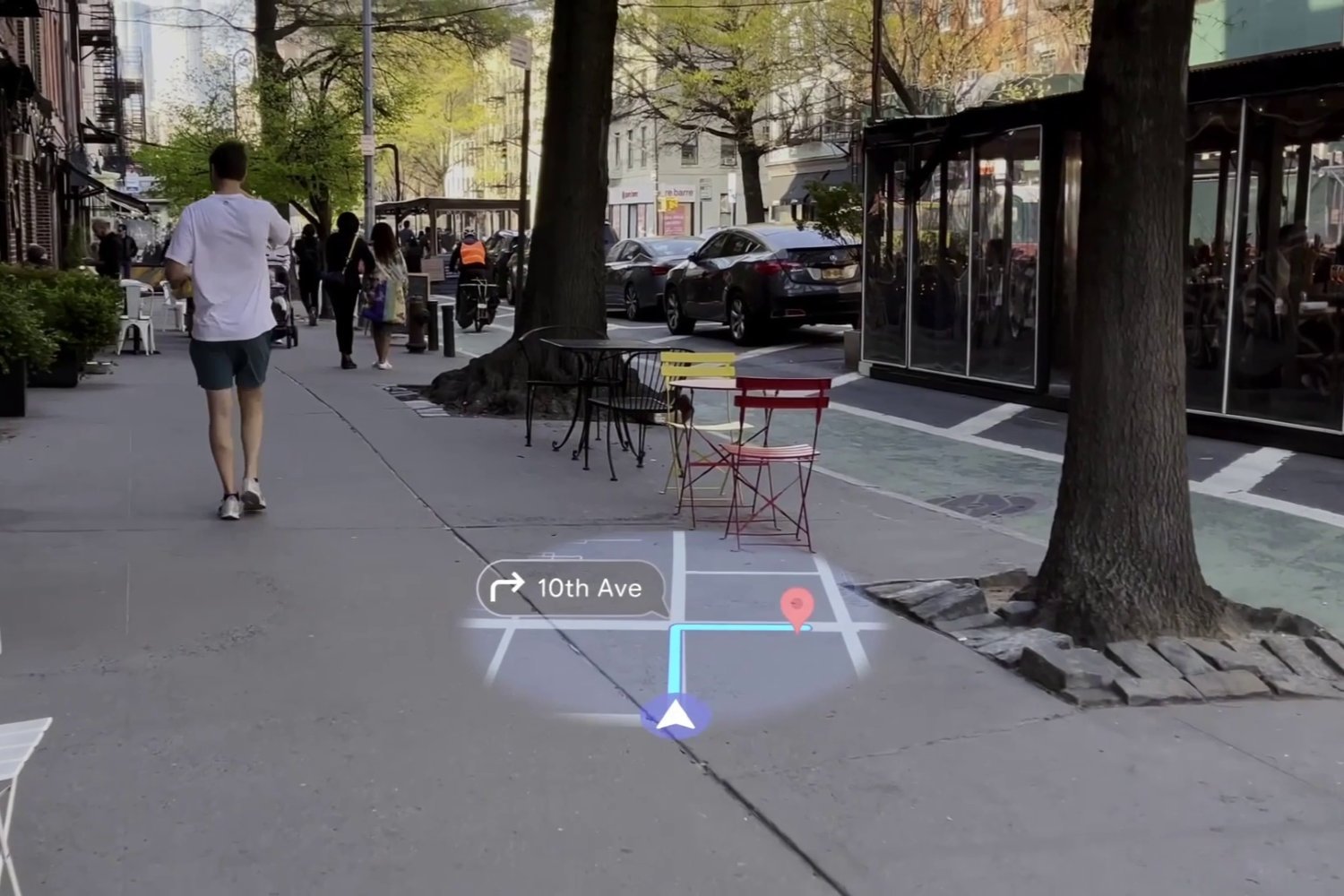

I got what I wanted. Google showed off what its Android XR tech can bring to smart glasses. In a live demo, Google showcased how a pair of unspecified smart glasses did a few of the things that I’ve been waiting to do, including projecting live navigation and remembering objects in your environment—basically the stuff that it pitched with Project Astra last year, but in a glasses form factor.

Google Android XR augmented reality smart glasses demo. Lil cameo by @backlon and @Giannis_An34. the stream lagged but it seems impressive pic.twitter.com/Amxba8eL4j

— Ray Wong (@raywongy) May 20, 2025

There’s still a lot that needs to happen, both hardware and software-wise, before you can walk around wearing glasses that actually do all those things, but it was exciting to see that Google is making progress in that direction. It’s worth noting that not all of the demos went off smoothly—there was lots of stutter in the live translation demo—but I guess props to them for giving it a go.

When we’ll actually get to walk around wearing functional smart glasses with some kind of optical passthrough or virtual display is anyone’s guess, but the race is certainly heating up. —James Pero

Google May Finally Offer a Way Regular Folk Can Test for AI-Generated Fakes

Google’s SynthID has been around for nearly two years, but it’s been largely kept out of the public eye. The system marks AI-generated images, video, or audio with an invisible watermark that people can’t perceive but that Google DeepMind’s proprietary tool can detect. At I/O, Google said it was working with both Nvidia and GetReal to introduce the same watermarking technique with those companies’ AI image generators. Users may be able to detect these watermarks themselves, even if only part of the media was modified with AI. Early testers are getting access to it “today,” but hopefully more people can access it at a later date from labs.google/synthid. —Kyle Barr

Not Sure My Bladder Is Gonna Make It…

This keynote has been going on for 1.5 hours now. Do I run to the restroom now or wait? But how much longer until it ends??? Can we petition Sundar Pichai to make these keynotes shorter or at least have an intermission?

Update: I ran for it right near the end before Android XR news hit. I almost made it… —Raymond Wong

Google’s Veo 3 Gets a Big Sound Upgrade

Google’s new video generator, Veo 3, is getting a big upgrade that includes sound generation, and it’s not just dialogue. Veo 3 can also generate sound effects and music. In a demo, Google showed off an animated forest scene that includes all three: dialogue, sound effects, and music. The length of clips, I assume, will be short at first, but the results look pretty sophisticated if the demo is to be believed. —James Pero

Veo 3 can generate dialogue, background music, and sound effects for generated video pic.twitter.com/RiJxLgsmfw

— Ray Wong (@raywongy) May 20, 2025

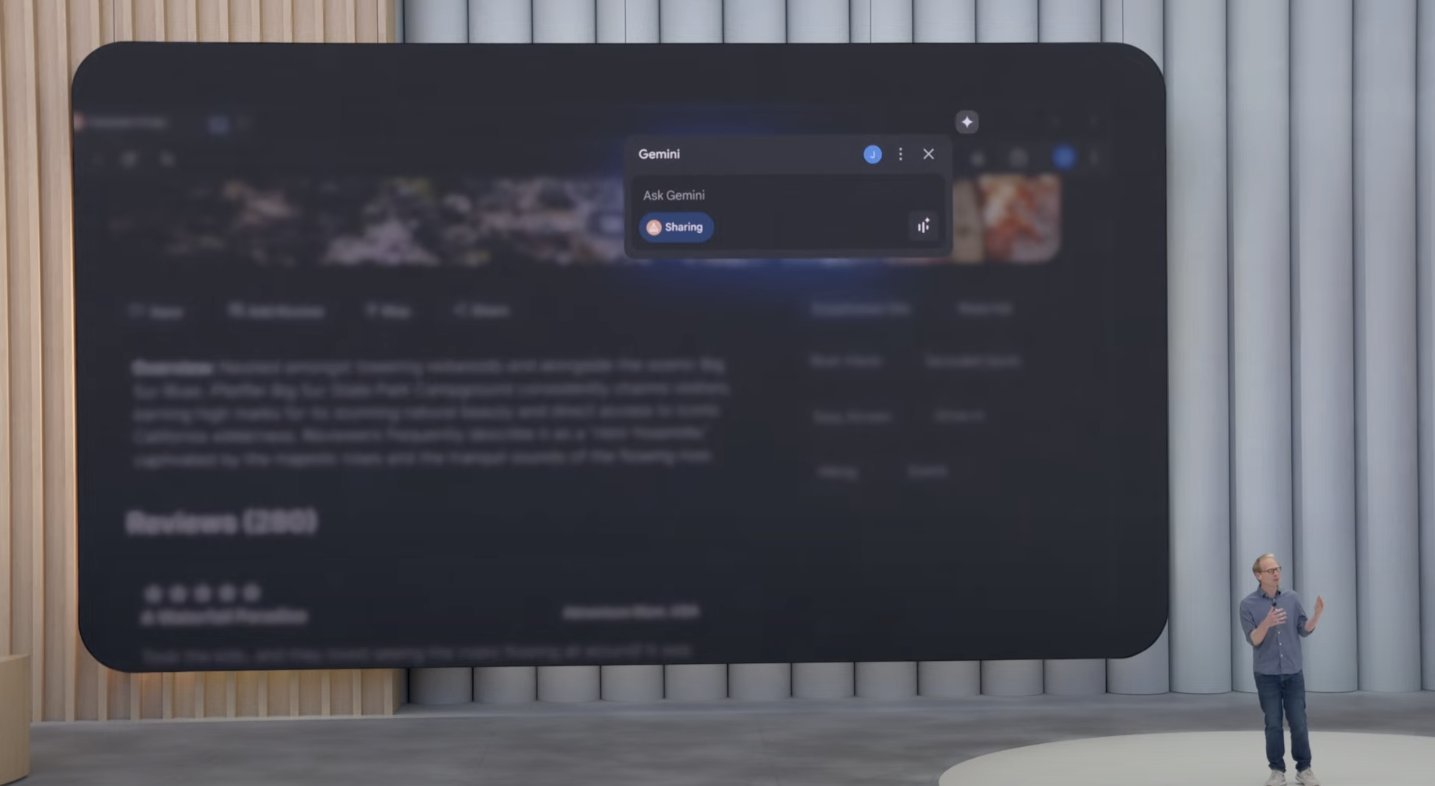

Gemini Will be in Chrome, Just Like Everywhere Else

If you pay for a Google One subscription, you’ll start to see Gemini in your Google Chrome browser (and—judging by this developer conference—everywhere else) later this week. It will appear as the sparkle icon at the top of your browser app. You can use it to bring up a prompt box and ask a question about the page you’re browsing, for example to consolidate a number of user reviews for a local campsite. —Kyle Barr

Behold: The Most Realistic Video Calling Ever Made?

Google’s high-tech video conferencing tech, now called Beam, looks impressive. You can make eye contact! It feels like the person in the screen is right in front of you! It’s glasses-free 3D! Come back down to Earth, buddy—it’s not coming out as a consumer product. Commercial first with partners like HP. Time to apply for a new job? —Raymond Wong

What if Search Was “Live?”

Google doesn’t want Search to be tied to your browser or apps anymore. Search Live is akin to the video and audio comprehension capabilities of Gemini Live, but with the added benefit of getting quick answers based on sites from around the web. Google showed how Search Live could comprehend queries about at-home science experiments and bring in answers from sites like Quora or YouTube. —Kyle Barr

Search Live pic.twitter.com/B3Ap3yBIQZ

— Ray Wong (@raywongy) May 20, 2025

Android XR is Google Glass, but With AI

Google is getting deep into augmented reality with Android XR—its operating system built specifically for AR glasses and VR headsets. Google showed us how users may be able to see a holographic live Google Maps view directly on their glasses or set up calendar events, all without needing to touch a single screen. This uses Gemini AI to comprehend your voice prompts and follow through on your instructions. Google doesn’t have its own device to share at I/O, but it’s planning to work with companies like Xreal and Samsung to craft new devices across both AR and VR. —Kyle Barr

One (Very Expensive) AI Subscription to Rule Them All

I know how much you all love subscriptions! Google does too, apparently, and is now offering a $250 per month AI bundle that groups some of its most advanced AI services. Subscribing to Google AI Ultra will get you:

- Gemini and its full capabilities

- Flow, a new, more advanced AI filmmaking tool based on Veo

- Whisk, which allows text-to-image creation

- NotebookLM, an AI note-taking app

- Gemini in Gmail and Docs

- Gemini in Chrome

- Project Mariner, an agentic research AI

- 30TB of storage

I’m not sure who needs all of this, but maybe there are more AI superusers than I thought. —James Pero

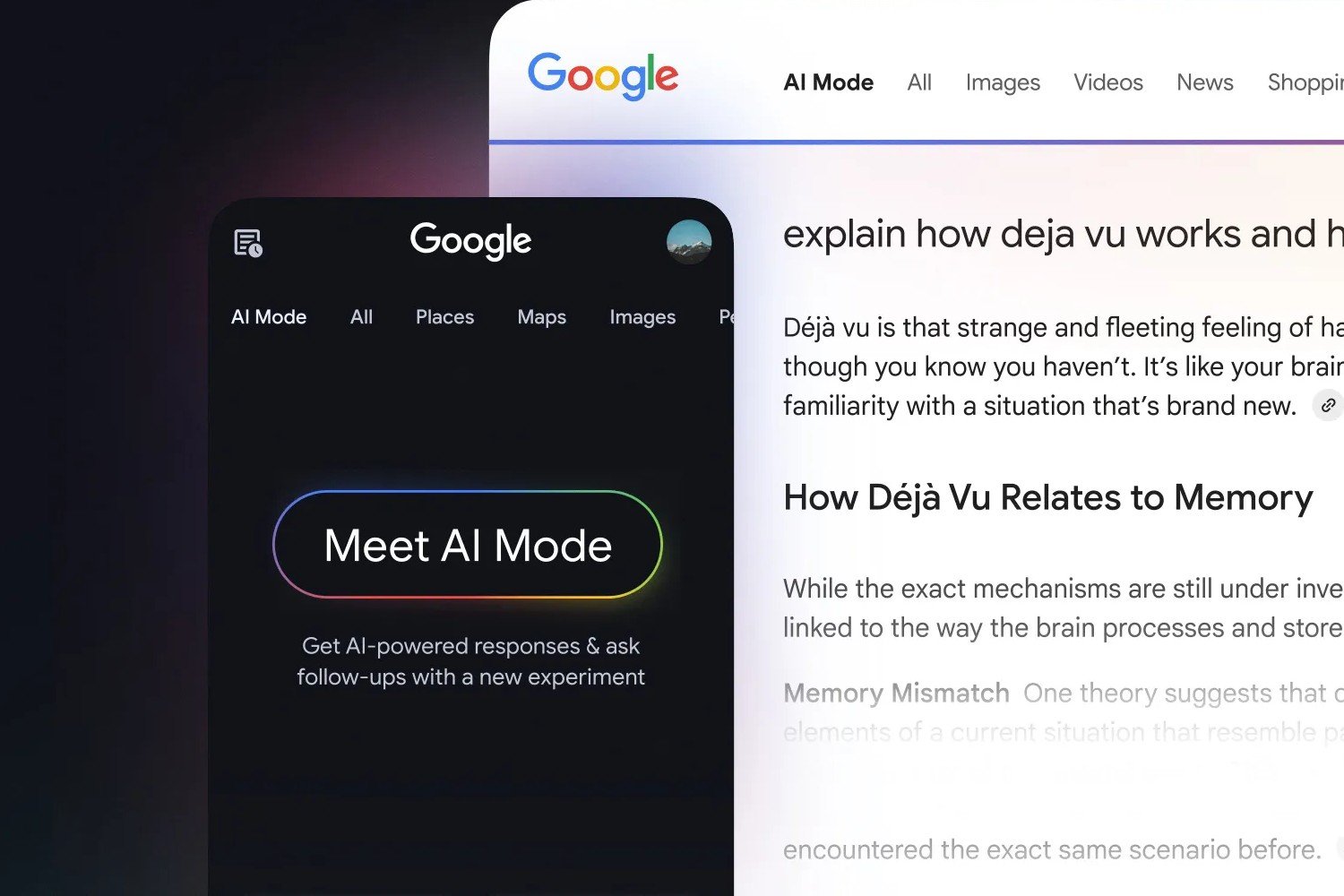

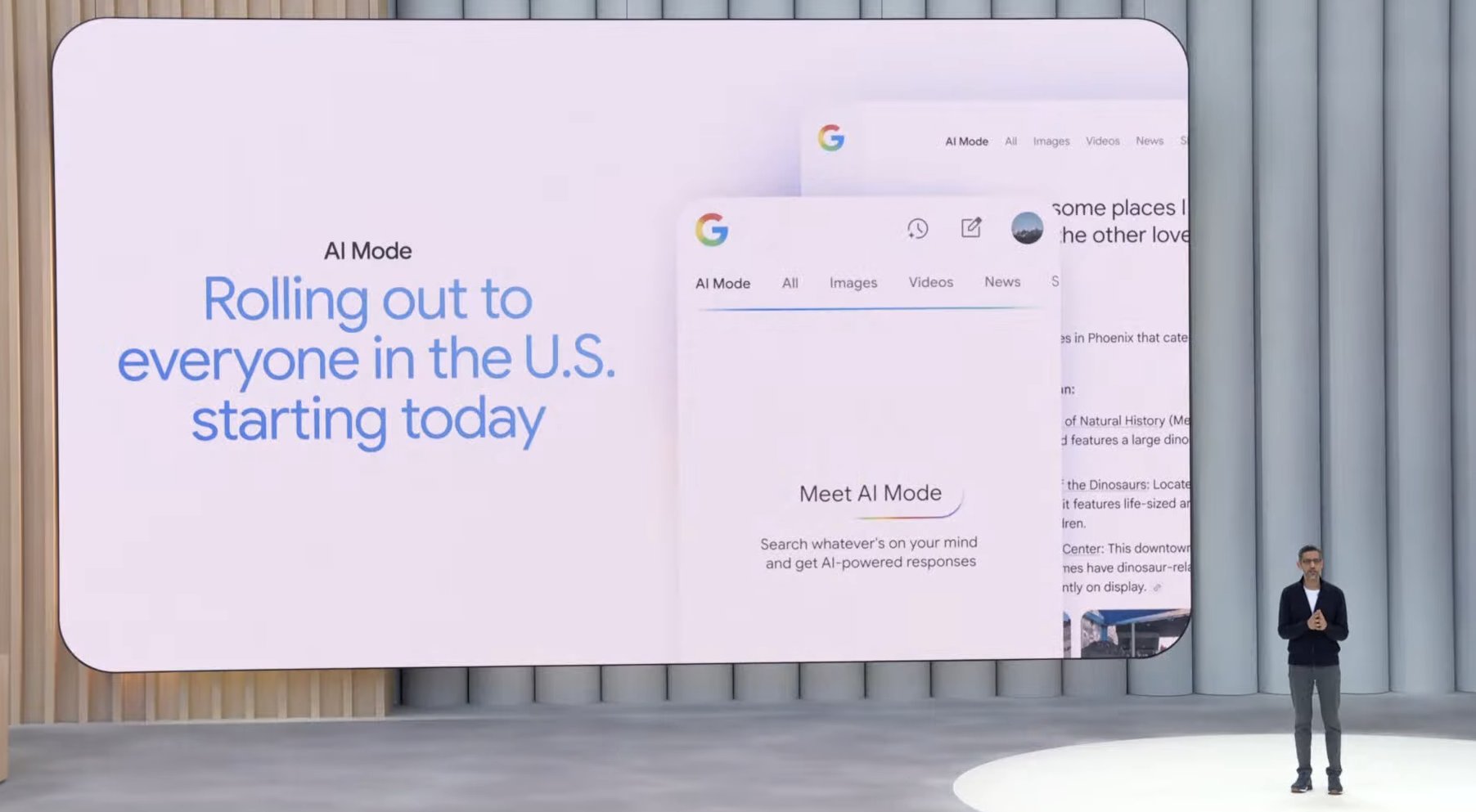

AI-ification of Google Search Expands With an ‘AI Mode’ Tab

Google CEO Sundar Pichai was keen to claim that users are big, big fans of AI Overviews in Google Search results. If there wasn’t already enough AI in your search bar, Google will now stick an entire “AI Mode” tab next to the Google Lens button. It uses the Gemini 2.5 model and opens up an entirely new UI for searching via a prompt with a chatbot. After you input your rambling search query, it will bring up an assortment of short-form textual answers, links, and even a Google Maps widget, depending on what you were looking for.

AI Mode should be available starting today. Google said AI Mode pulls together information from the web alongside its other data, like weather or academic research through Google Scholar. It should also eventually encompass your “personal context,” which will be available later this summer. Eventually, Google will add more AI Mode capabilities directly to AI Overviews. —Kyle Barr

News Embargo Has Lifted!

Get your butt over to Gizmodo.com’s home page because the Google I/O news embargo just lifted. We’ve got a bunch of stories, including this one about Google partnering up with Xreal for a new pair of “optical see-through” (OST) smart glasses called Project Aura. The smart glasses run Android XR and are powered by a Qualcomm chip. You can see three cameras. Wireless, these are not—you’ll need to tether to a phone or other device.

Update: Little scoop: I’ve confirmed that Project Aura has a 70-degree field of view, which is way wider than the One Pro’s FOV, which is 57 degrees. —Raymond Wong

Gemini Is the Future “Universal Assistant”

Google DeepMind’s CEO showed off the updated version of Project Astra running on a phone and drove home how its “personal, proactive, and powerful” AI features are the groundwork for a “universal assistant” that truly understands and works on your behalf. If you think Gemini is a fad, it’s time to get familiar with it because it’s not going anywhere. —Raymond Wong

Gemini will be made into a "universal AI assistant." That's the end goal says Google pic.twitter.com/21WY3kii82

— Ray Wong (@raywongy) May 20, 2025

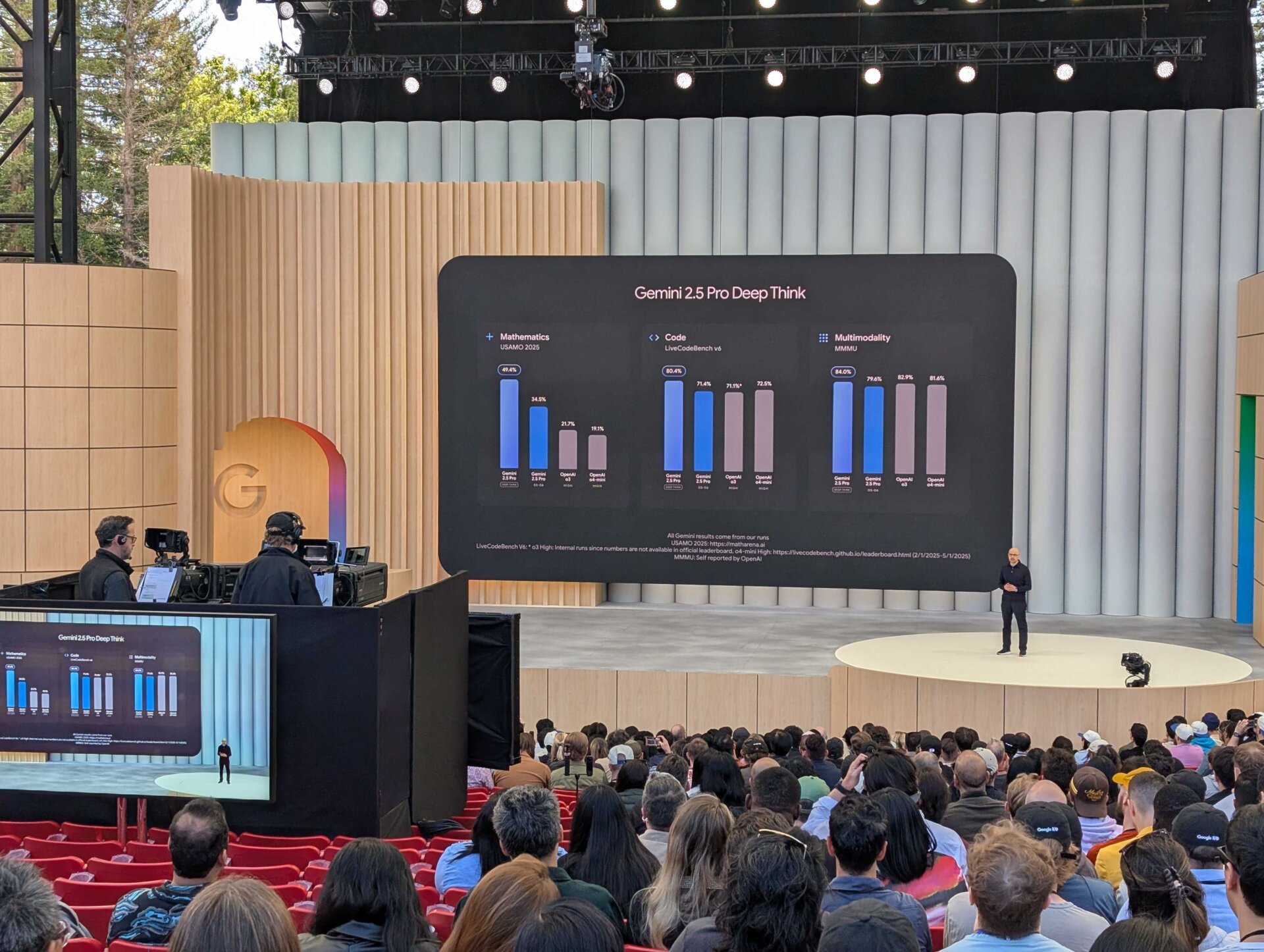

Gemini 2.5 Pro Is Here

Google says Gemini 2.5 Pro is its “most advanced model yet,” with “enhanced reasoning,” better coding ability, and even the ability to create interactive simulations. You can try it now via Google AI Studio. —James Pero

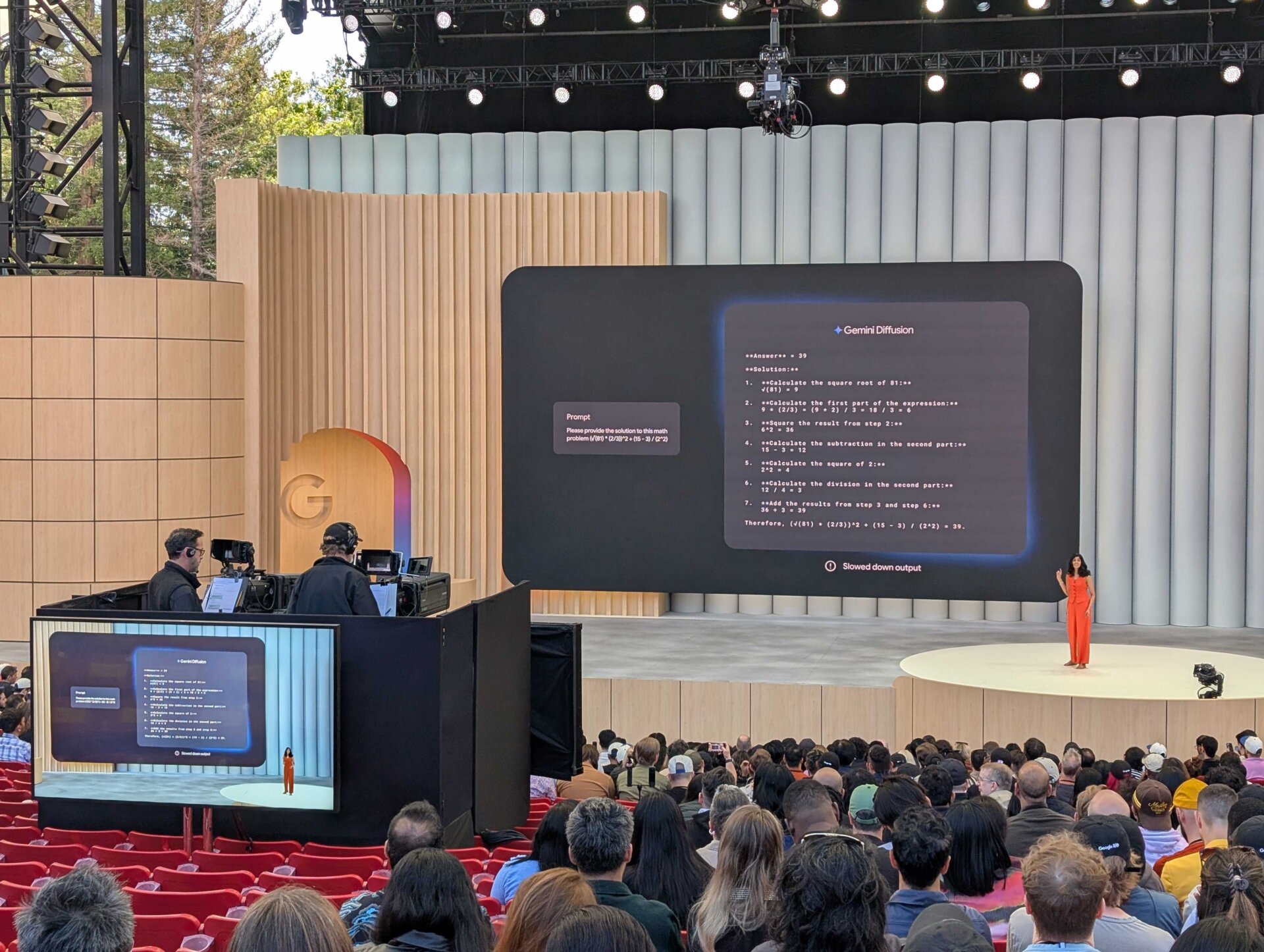

Gemini Diffusion AI Model Does to Text What Image Generators do for Photos

There are two major types of generative AI model used today. One is the LLM, AKA the large language model, and the other is the diffusion model, which is mostly used for image generation. The Gemini Diffusion model blurs the lines between these types of models. Google said its new research model can iterate on a solution quickly and correct itself while generating an answer. For math or coding prompts, Gemini Diffusion can potentially output an entire response much faster than a typical chatbot. Unlike a traditional LLM, which may take a few seconds to answer a question, Gemini Diffusion can create a response to a complex math equation in the blink of an eye and still share the steps it took to reach its conclusion. —Kyle Barr

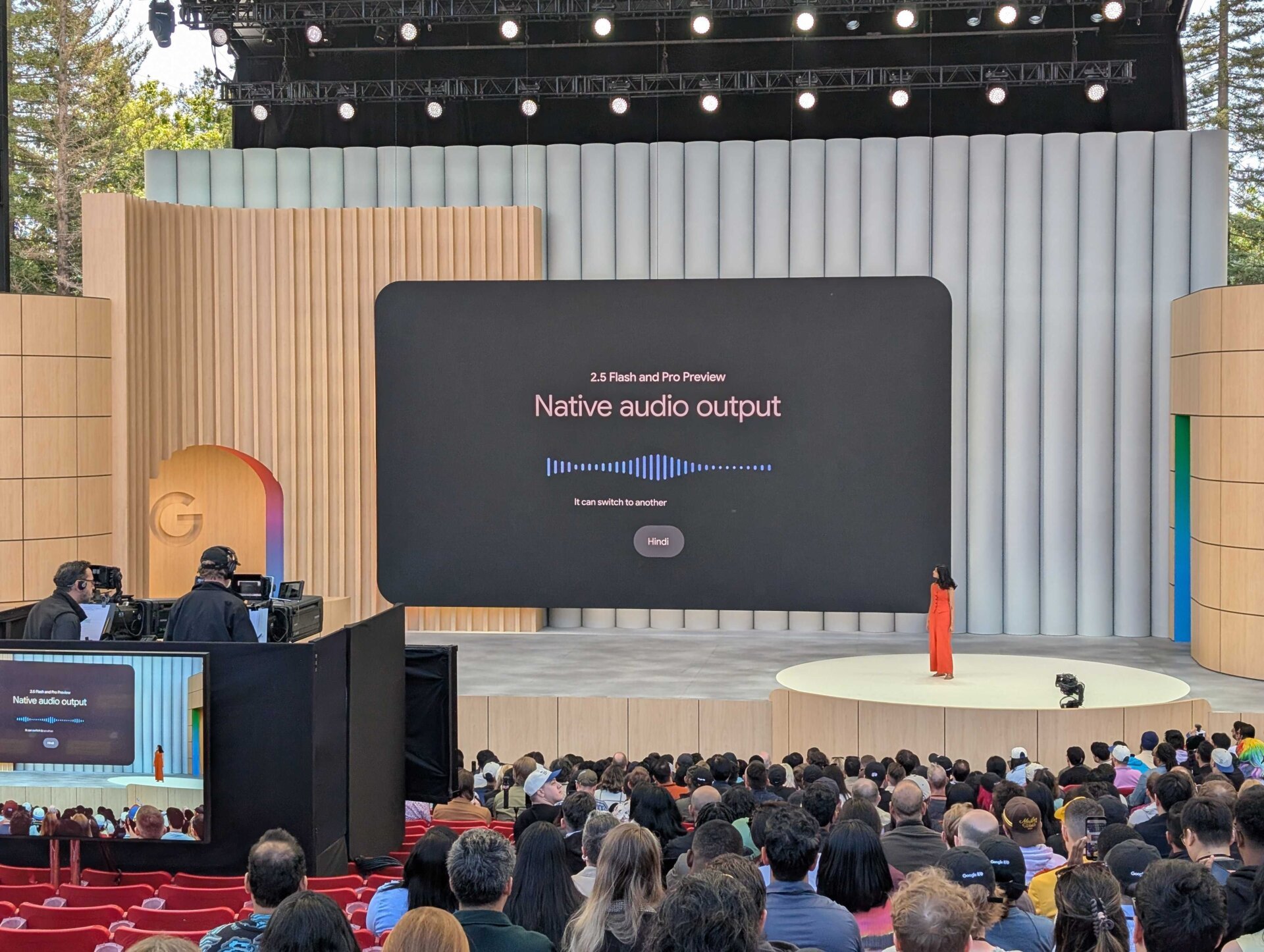

New Gemini 2.5 Flash Can Whisper?

New Gemini 2.5 Flash and Gemini Pro models are incoming and, naturally, Google says both are faster and more sophisticated across the board.

One of the improvements for Gemini 2.5 Flash is even more inflection when speaking. Unfortunately for my ears, Google demoed the new Flash speaking in a whisper that sent chills down my spine. —James Pero

Drink Whenever Somebody Says “Gemini” and “AI”

Is anybody keeping track of how many times Google execs have said “Gemini” and “AI” so far? Oops, I think I’m already drunk, and we’re only 20 minutes in. —Raymond Wong

Gemini is Getting Very Tired of Your S***

Google’s Project Astra is supposed to be getting much better at avoiding hallucinations, AKA when the AI makes stuff up. Project Astra’s vision and audio comprehension capabilities are supposed to be far better at knowing when you’re trying to trick it. In a video, Google showed how its Gemini Live AI wouldn’t buy your bullshit if you tell it that a garbage truck is a convertible, a lamppost is a skyscraper, or your shadow is some stalker. This should hopefully mean the AI doesn’t confidently lie to you as well.

Google CEO Sundar Pichai said, “Gemini is really good at telling you when you’re wrong.” These enhanced features should be rolling out today for the Gemini app on iOS and Android. —Kyle Barr

Google Project Astra is the holy grail of an AI chatbot that is conversational and uses computer vision pic.twitter.com/3YVUuzUZM1

— Ray Wong (@raywongy) May 20, 2025

Release the Agents

Like pretty much every other AI player, Google is pursuing agentic AI in a big way. I’d prepare for a lot more talk about how Gemini can take tasks off your hands as the keynote progresses. —James Pero

Project Starline Is Now Google Beam

Google has finally moved Project Starline—its futuristic video-calling machine—into a commercial project called Google Beam. According to Pichai, Google Beam can take a 2D image and transform it into a 3D one, and will also incorporate live translate. —James Pero

Good News: Gemini Plays Pokemon

Google’s CEO, Sundar Pichai, says Google is shipping at a relentless pace, and to be honest, I tend to agree. There are tons of Gemini models out there already, even though it’s only been out for two years.

Probably my favorite milestone, though, is that it has now completed Pokémon Blue, earning all 8 badges according to Pichai. —James Pero

Let’s Do This

Buckle up, kiddos, it’s I/O time. Methinks there will be a lot to get to, so you may want to grab a snack now. —James Pero

The DJ Is Picking Up the Beats

Counting down until the keynote… only a few more minutes to go. The DJ just said AI is changing music and how it’s made. But don’t forget that we’re all here… in person. Will we all be wearing Android XR smart glasses next year? Mixed reality headsets? —Raymond Wong

Working Hard or Hardly Working?

Fun fact: I haven’t attended Google I/O in person since before Covid-19. The Wi-Fi is definitely stronger and more stable now. It’s so great to be back and covering for Gizmodo. Dream job, unlocked! —Raymond Wong

I Have Found Food and Coffee

Mini breakfast burritos… bagels… but these bagels can’t compare to real Made In New York City bagels with that authentic NY water 😏 —Raymond Wong

40 Minutes Until the Keynote

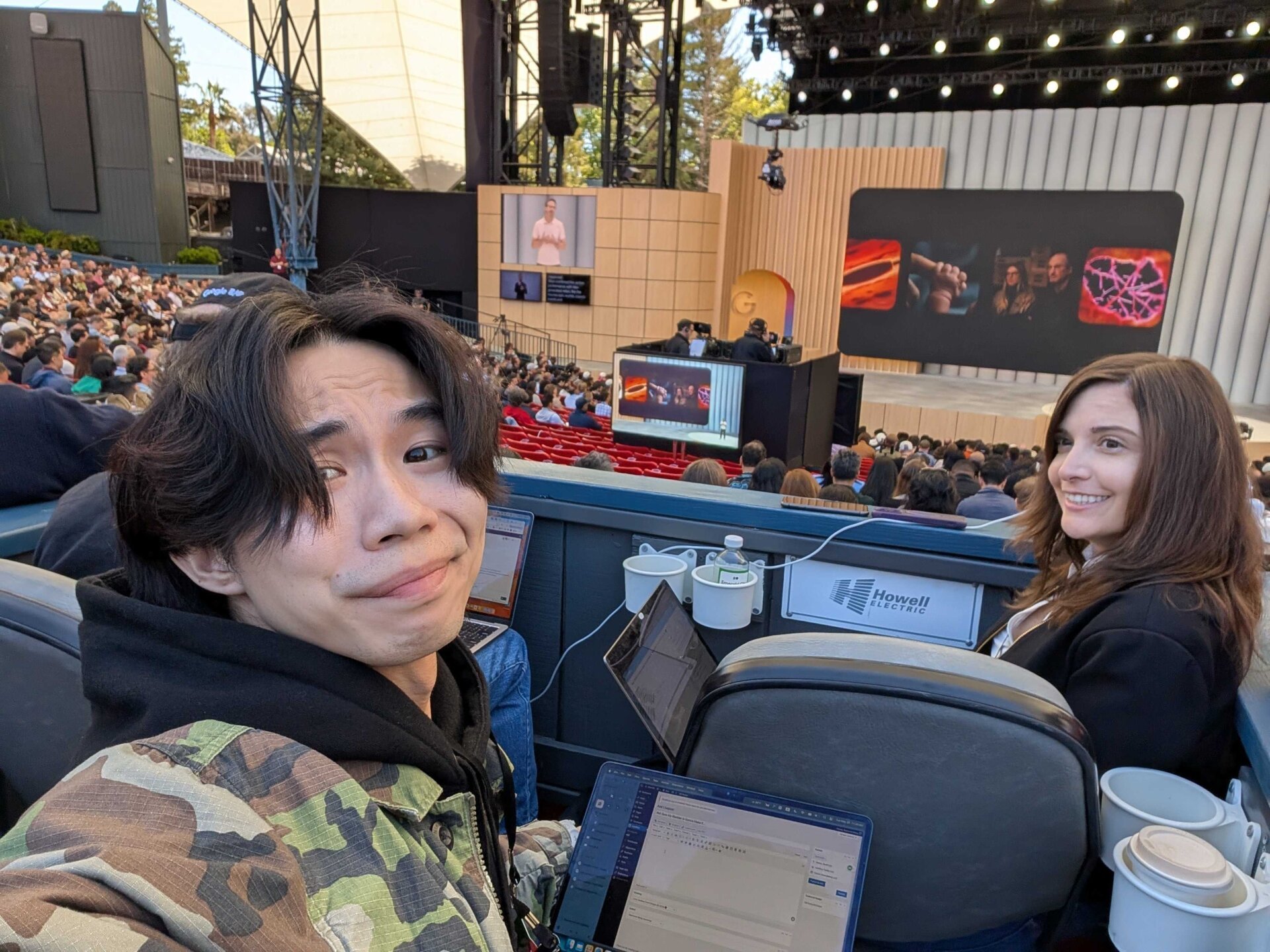

I’ve arrived at the Shoreline Amphitheatre in Mountain View, Calif., where the Google I/O keynote is taking place in 40 minutes. Seats are filling up. But first, must go check out the breakfast situation because my tummy is growling… —Raymond Wong

Should We Do a Giveaway?

Google I/O attendees get a special tote bag, a metal water bottle, a cap, and a cute sheet of stickers. I always end up donating this stuff to Goodwill during the holidays. A guy living in NYC with two cats only has so much room for tote bags and water bottles… Would be cool to do a giveaway. Leave a comment to let us know if you’d be into that and I can pester top brass to make it happen 🤪 —Raymond Wong

Got My Press Badge!

In 13 hours, Google will blitz everyone with Gemini AI, Gemini AI, and tons more Gemini AI. Who’s ready for… Gemini AI? —Raymond Wong

Picked up my #GoogleIO press badge. Still doesn’t feel real that I’m reppin’ @Gizmodo. Someone pinch me! Anyway, follow us on Instagram where I’m doing a takeover of Stories, read our live blog, and check out our coverage starting tomorrow. Yeeeeeeeeeee! pic.twitter.com/crnnr3Gtg7

— Ray Wong (@raywongy) May 20, 2025

Google Glass: The Redux

Google is very obviously inching toward the release of some kind of smart glasses product for the first time since (gulp) Google Glass, and if I were a betting man, I’d say this one will have a much warmer reception than its forebear. I’m not saying Google can snatch the crown from Meta and its Ray-Ban smart glasses right out of the gate, but if it plays its cards right, it could capitalize on the integration with its other hardware (hello, Pixel devices) in a big way. Meta may finally have a real competitor on its hands.

ICYMI: Here’s Google’s President of the Android Ecosystem, Sameer Samat, teasing some kind of smart glasses device in a recorded demo last week. —James Pero

Oh Hey, Didn’t See Ya There…

Hi folks, I’m James Pero, Gizmodo’s new Senior Writer. There’s a lot we have to get to with Google I/O, so I’ll keep this introduction short.

I like long walks on the beach, the wind in my nonexistent hair, and I’m really, really, looking forward to bringing you even more of the spicy, insightful, and entertaining coverage on consumer tech that Gizmodo is known for.

I’m starting my tenure here out hot with Google I/O, so make sure you check back here throughout the week to get those sweet, sweet blogs and commentary from me and Gizmodo’s Senior Consumer Tech Editor Raymond Wong. —James Pero

Ready for Google I/O 2025!

Hey everyone! Raymond Wong, senior editor in charge of Gizmodo’s consumer tech team, here! Landed in San Francisco (the sunrise was *chef’s kiss*), and I’ll be making my way over to Mountain View, California, later today to pick up my press badge and scope out the scene for tomorrow’s Google I/O keynote, which kicks off at 1 p.m. ET / 10 a.m. PT. Google I/O is a developer conference, but that doesn’t mean it’s news only for engineers. While there will be a lot of nerdy stuff that will have developers hollering, what Google announces—expect updates on Gemini AI, Android, and Android XR, to name a few headliners—will shape consumer products (hardware, software, and services) for the rest of this year and also the years to come. I/O is a glimpse at Google’s technology roadmap as AI weaves itself into the way we compute at our desks and on the go. This is going to be a fun live blog! —Raymond Wong